TrustyFAQ

TrustyFAQ is an AI-powered FAQ management system built for team leads who are overwhelmed by repetitive questions. It uses semantic search and AI-generated responses to answer questions instantly, so users no longer have to scroll through traditional FAQ lists.

The Problem

During my time at Mercor, I observed team leads constantly being bombarded with FAQ-type questions, even when comprehensive FAQs already existed. The root issue wasn't a lack of information; it was user-experience friction. People don't want to scroll through long FAQ lists; they want immediate answers. Traditional keyword search often returns irrelevant results, and the context switching required to find answers disrupts workflow.

My Solution & Technical Details

I designed TrustyFAQ as an AI-powered FAQ management system that eliminates scrolling fatigue through semantic search and intelligent responses. The system uses vector embeddings to understand user intent and provides instant, relevant answers as users type. This reduces team lead interruptions while making knowledge more accessible through natural language queries.

Key Technical Implementations:

- Built Next.js 14 frontend with App Router and shadcn/ui components for modern UI

- Implemented FastAPI backend with automatic documentation and type hints

- Integrated Supabase with PostgreSQL and pgvector extension for vector search

- Created semantic search using vector embeddings for similarity matching

- Implemented Google Gemini AI for intelligent content generation and responses

- Built multi-tenant architecture with Supabase Row Level Security

- Added Celery task queue with Redis for background processing and caching

- Designed real-time search with debounced queries and React Query caching

- Created workspace isolation for secure multi-tenant data access

- Implemented AI-powered content enhancement and smart categorization

- Built responsive design optimized for desktop, tablet, and mobile

- Added public FAQ pages for external access and sharing
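To make the semantic search item above more concrete, here is a minimal sketch of similarity matching over embeddings. It is an illustration under assumptions, not the project's actual code: the `FaqEntry` type, the `search` function, and the tiny hand-written vectors are hypothetical, and in the real system the embeddings would come from an embedding model and the ranking would likely run inside PostgreSQL through pgvector rather than in Python.

```python
import math
from dataclasses import dataclass


@dataclass
class FaqEntry:
    """Hypothetical FAQ record; the real schema is not shown in this write-up."""
    question: str
    answer: str
    embedding: list[float]


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Cosine of the angle between two vectors; 0.0 if either has zero length."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        return 0.0
    return dot / (norm_a * norm_b)


def search(query_embedding: list[float], entries: list[FaqEntry],
           top_k: int = 3, min_score: float = 0.0) -> list[tuple[float, FaqEntry]]:
    """Rank entries by similarity to the query, best match first."""
    scored = [(cosine_similarity(query_embedding, e.embedding), e) for e in entries]
    scored = [pair for pair in scored if pair[0] >= min_score]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return scored[:top_k]


if __name__ == "__main__":
    # Toy 3-dimensional vectors stand in for real embeddings.
    faqs = [
        FaqEntry("How do I reset my password?", "Use the reset link.", [1.0, 0.0, 0.0]),
        FaqEntry("How do I request time off?", "Use the HR portal.", [0.0, 1.0, 0.0]),
    ]
    for score, entry in search([0.9, 0.1, 0.0], faqs, top_k=1):
        print(f"{score:.3f} {entry.question}")
```

Ranking by cosine similarity is what lets a query such as "forgot my login" land on a password-reset FAQ even when the two share no keywords, provided their embeddings point in a similar direction.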

Project Screenshots

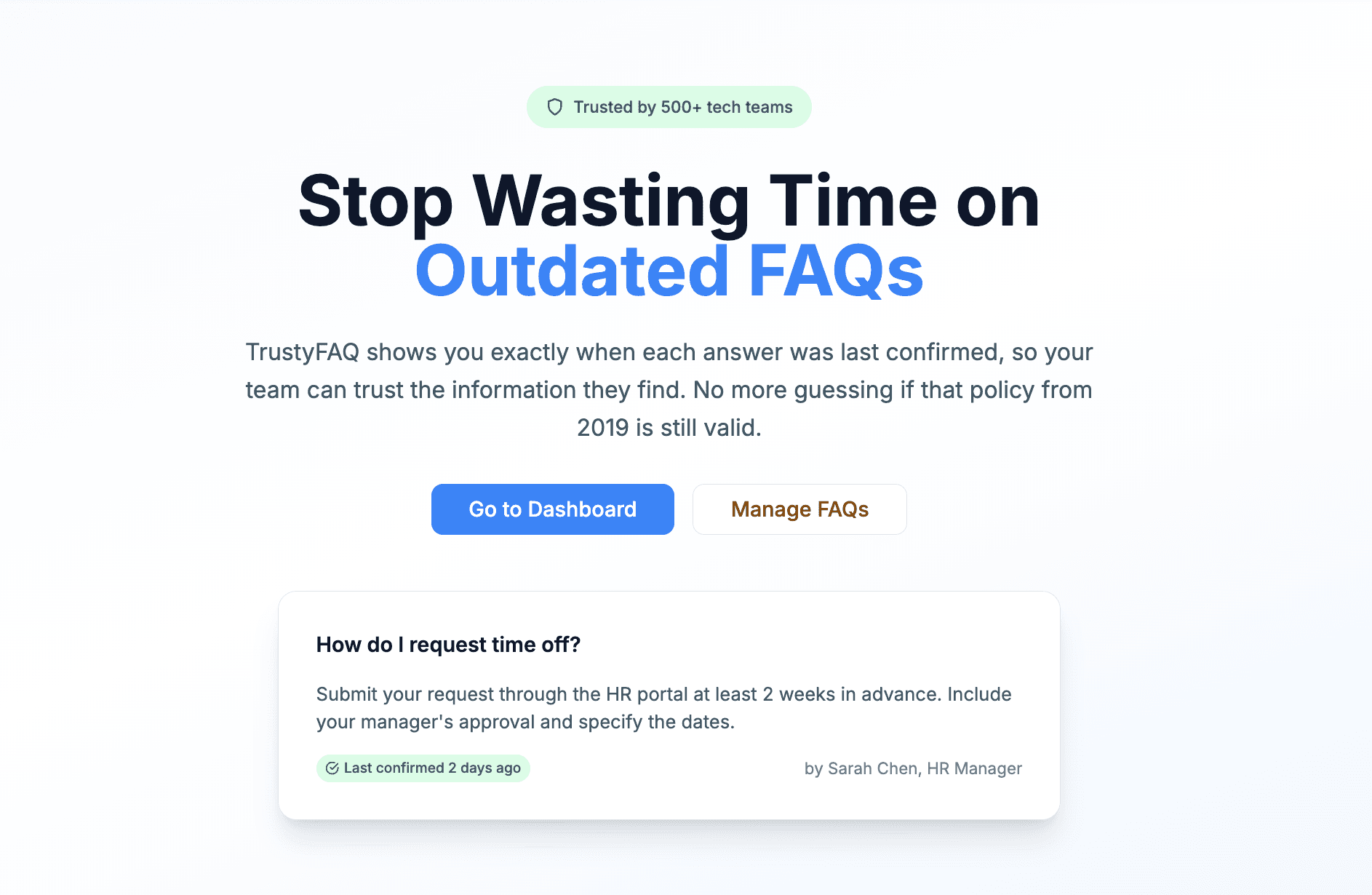

The main TrustyFAQ interface showing the modern design and search functionality

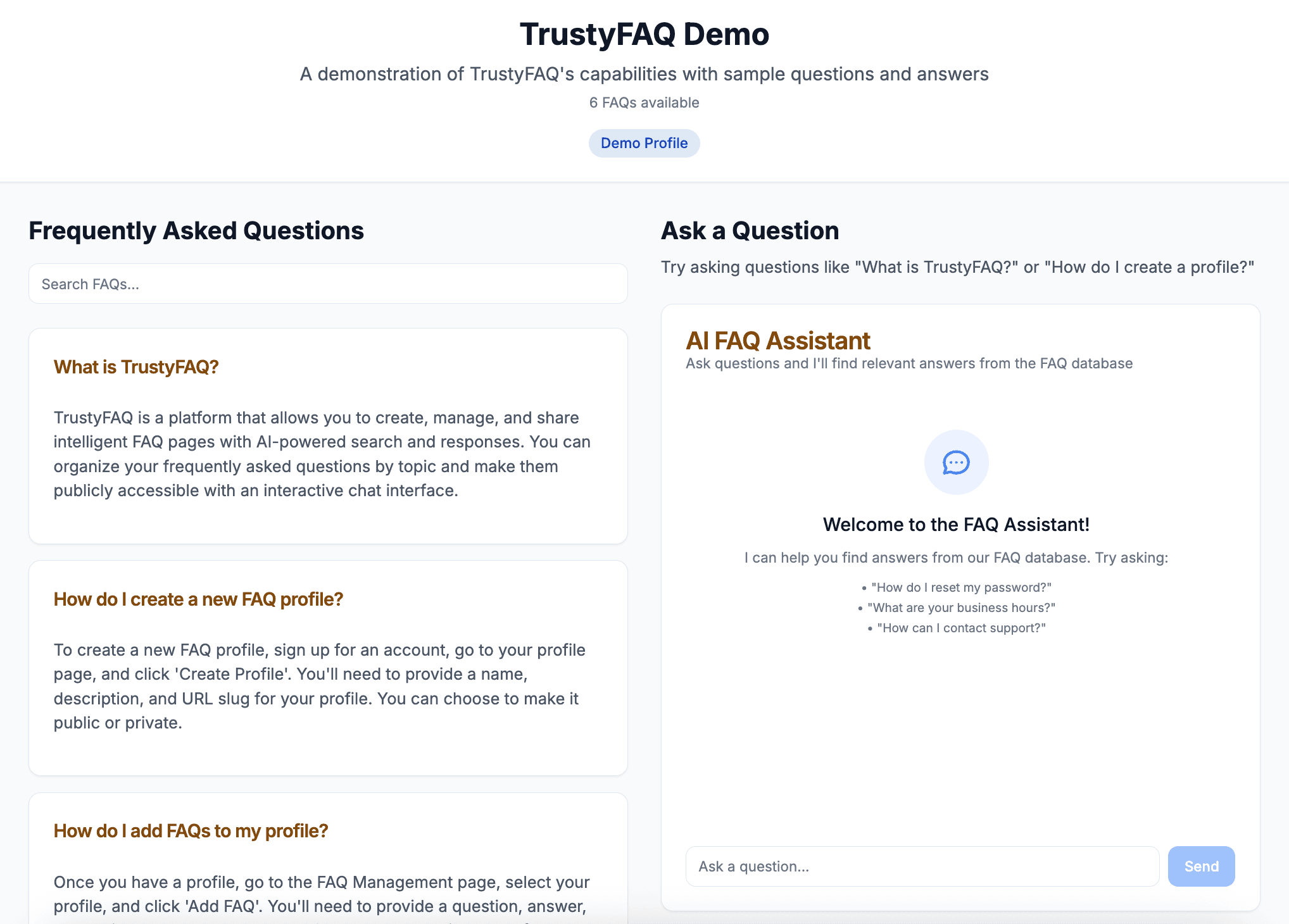

Demo interface showcasing the AI-powered search and response capabilities

Outcomes & Results

- Successfully implemented vector-based semantic search that eliminates scrolling through FAQ lists

- Built AI-powered system that provides instant answers to natural language queries

- Created multi-tenant SaaS platform with secure data isolation between organizations

- Implemented intelligent content generation using Google Gemini AI

- Achieved high-performance search with pgvector and optimized database queries

- Built modern, responsive interface that works across desktop, tablet, and mobile

- Demonstrated full-stack development with modern Python and TypeScript frameworks

- Created system that reduces repetitive questions while improving knowledge accessibility

AI Integration & Code Implementation

🤖 Google Gemini AI Integration

Model Configuration

- Model: Google Gemini AI

- Max Tokens: 300

- Temperature: 0.3
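As a rough sketch, the settings above could be held in a small validated object so they live in one place. The class name `GenerationSettings` is hypothetical, the field names are chosen to resemble common generation-config naming and are an assumption, and no specific Gemini model name is given here because the write-up does not state one.

```python
from dataclasses import asdict, dataclass


@dataclass(frozen=True)
class GenerationSettings:
    """Hypothetical holder for the generation settings listed above."""
    max_output_tokens: int = 300   # "Max Tokens: 300"
    temperature: float = 0.3       # "Temperature: 0.3"

    def __post_init__(self) -> None:
        # Basic sanity checks; exact valid ranges depend on the model used.
        if self.max_output_tokens <= 0:
            raise ValueError("max_output_tokens must be positive")
        if self.temperature < 0.0:
            raise ValueError("temperature must be non-negative")

    def as_dict(self) -> dict:
        """Plain dict form, convenient for passing to a client library."""
        return asdict(self)
```

A low temperature like 0.3 keeps answers close to the FAQ content, and a 300-token cap keeps responses short enough to read at a glance.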

Key Features

- Content generation and enhancement

- Intelligent response generation

- Semantic search with vector embeddings

- Context-aware search results

🔧 API Implementation Details

Endpoint

/api/faqs/search

Error Handling

Comprehensive error handling with fallback responses and rate limiting
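One way to picture the combination of rate limiting and fallback responses is the sketch below. It is a simplified, in-memory illustration under assumptions: `SlidingWindowLimiter`, `answer_with_fallback`, `FALLBACK_ANSWER`, and the response shape are hypothetical names, and a deployed version would more likely keep its counters in Redis than in process memory.

```python
import time
from collections import deque
from typing import Callable

# Hypothetical fallback text returned when the AI call fails.
FALLBACK_ANSWER = "Sorry, I could not generate an answer right now. Please try the FAQ list."


class SlidingWindowLimiter:
    """Allow at most max_calls per key within any window_seconds span."""

    def __init__(self, max_calls: int, window_seconds: float,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.max_calls = max_calls
        self.window_seconds = window_seconds
        self._clock = clock
        self._calls: dict[str, deque] = {}

    def allow(self, key: str) -> bool:
        now = self._clock()
        calls = self._calls.setdefault(key, deque())
        # Drop timestamps that have aged out of the window.
        while calls and now - calls[0] >= self.window_seconds:
            calls.popleft()
        if len(calls) >= self.max_calls:
            return False
        calls.append(now)
        return True


def answer_with_fallback(query: str, generate: Callable[[str], str],
                         limiter: SlidingWindowLimiter, client_id: str) -> dict:
    """Return an AI answer, a fallback on failure, or a 429-style rejection."""
    if not limiter.allow(client_id):
        return {"status": 429, "answer": None, "error": "rate_limited"}
    try:
        return {"status": 200, "answer": generate(query), "error": None}
    except Exception:
        # Any failure in the AI call degrades to a canned answer, not a crash.
        return {"status": 200, "answer": FALLBACK_ANSWER, "error": "ai_unavailable"}
```

Passing the clock and the `generate` callable in as parameters keeps the sketch testable without real time delays or a live model.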

Caching Strategy

Redis caching for search results and AI responses
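The caching idea can be sketched as follows. This is an assumption-laden illustration: a small in-memory `TtlCache` stands in for Redis so the example runs on its own, and the key format, the `cached_search` helper, and the TTL handling are all hypothetical. Scoping the key by workspace is my assumption, chosen so one tenant's cached results are never served to another.

```python
import hashlib
import json
import time
from typing import Callable, Optional


def cache_key(workspace_id: str, query: str) -> str:
    """Build a per-workspace key from a whitespace- and case-normalized query."""
    normalized = " ".join(query.lower().split())
    digest = hashlib.sha256(normalized.encode("utf-8")).hexdigest()[:16]
    return f"faq:search:{workspace_id}:{digest}"


class TtlCache:
    """In-memory stand-in for Redis: values are JSON strings with an expiry."""

    def __init__(self, ttl_seconds: float,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.ttl_seconds = ttl_seconds
        self._clock = clock
        self._store: dict[str, tuple[float, str]] = {}

    def get(self, key: str) -> Optional[object]:
        item = self._store.get(key)
        if item is None:
            return None
        expires_at, payload = item
        if self._clock() >= expires_at:
            del self._store[key]  # expired entries behave like a miss
            return None
        return json.loads(payload)

    def set(self, key: str, value: object) -> None:
        self._store[key] = (self._clock() + self.ttl_seconds, json.dumps(value))


def cached_search(cache: TtlCache, workspace_id: str, query: str,
                  search_fn: Callable[[str], list]) -> list:
    """Return cached results when present; otherwise search and store them."""
    key = cache_key(workspace_id, query)
    hit = cache.get(key)
    if hit is not None:
        return hit
    result = search_fn(query)
    cache.set(key, result)
    return result
```

Normalizing the query before hashing means "Reset  Password" and "reset password" share one cache entry, which matters for search-as-you-type traffic.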

💡 Prompt Engineering

You are an intelligent FAQ assistant. Based on the following FAQ content and user query, provide a helpful, accurate response. If the query doesn't match the FAQ content, suggest related topics or ask for clarification.
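A minimal sketch of how this prompt might be combined with retrieved FAQ content and the user's query is shown below. The `build_prompt` function, the numbered layout, and the `max_faqs` limit are assumptions for illustration; only the instruction text itself comes from the prompt above.

```python
# Instruction text taken from the prompt above.
SYSTEM_PROMPT = (
    "You are an intelligent FAQ assistant. Based on the following FAQ content "
    "and user query, provide a helpful, accurate response. If the query doesn't "
    "match the FAQ content, suggest related topics or ask for clarification."
)


def build_prompt(query: str, faqs: list[tuple[str, str]], max_faqs: int = 5) -> str:
    """Assemble the instruction, the top FAQ matches, and the user query."""
    lines = [SYSTEM_PROMPT, "", "FAQ content:"]
    # Only the first max_faqs (question, answer) pairs are included,
    # which keeps the prompt short when many entries match.
    for index, (question, answer) in enumerate(faqs[:max_faqs], start=1):
        lines.append(f"{index}. Q: {question}")
        lines.append(f"   A: {answer}")
    lines.append("")
    lines.append(f"User query: {query.strip()}")
    return "\n".join(lines)


if __name__ == "__main__":
    print(build_prompt(
        "How do I reset my password?",
        [("How do I reset my password?", "Use the reset link on the login page.")],
    ))
```

Feeding the model only the top semantic-search matches, rather than the whole FAQ, keeps the prompt small and grounds the answer in content that is actually relevant to the query.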
